Jamstack Reconsidered

This site is generated using Hugo. Getting the site up and running was nothing short of a nightmare, which I have documented elsewhere. (Apparently, I am not the only one who has trouble with Hugo: see how it fares, relative to the competition, in the 2022 Usage and Satisfaction Survey.) But things are working now, and Hugo makes adding new content very easy indeed.

It therefore seems a good opportunity to reflect back and revisit the whole “Static Site Generator” (SSG) a.k.a. “JamStack” topic from a greater distance.

Why a Static Site Generator?

I must admit that I turned towards SSGs mostly in order to have the design and webtech part of the site (responsive layout, JavaScript, CSS) taken care of. There are clearly people who know all about this sort of thing, but I am not one of them. At the same time, the idea of a static site was attractive: a personal web presence and blog does not need an application server. And not having to rely on Wordpress or a similar solution was also very appealing.

In the end, I did get what I was looking for, but it was more painful than I’d expected, and some of this was my fault.

For starters, I failed to realize that for a truly static site that is updated only rarely and consists only of a handful of pages, an SSG is overkill. For that, a fixed theme is all that is required, either from a free site (such as Templatemo or Free-CSS) or from a commercial one (such as ThemeForest, Creative Market, or TemplateMonster).

This is no longer quite true as soon as the content changes even occasionally (like on a blog), or if a large amount of existing content needs to be published (such as for a major documentation site). Manually fiddling content into a web template works for a handful of pages, but becomes tedious and error-prone pretty quickly.

In other words, using an SSG is likely a good choice:

- if the content is even moderately dynamic.

- if the content is too big to handle manually.

- if you need the meta-data (see the next section).

The static website model is more restrictive than I had anticipated. Static means static: there is no way to respond to user actions at all. I had underestimated how much, even on a personal site, some form of interactivity may be desirable, from comment functionality to Captchas for contact form submission. All this can be added, but one should plan for it, because the need may arise faster than expected.

Meta-Data Management

What I only slowly began to understand is how much functionality of an SSG is related to “meta-data management”. Things like finding pages by tag. Or by date. Creating sitemaps. Rewriting input file names to generate “clean” URLs (that is, URLs ending with a directory name, not a file with extension).
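To make the “clean URL” rewriting concrete, here is a minimal sketch. The function name `clean_url` and the convention that `index` and `_index` files stand for their directory are assumptions made for illustration; they are not taken from any particular SSG.

```python
from pathlib import PurePosixPath


def clean_url(source_path: str) -> str:
    """Map a content file path to a URL that ends in a directory name."""
    path = PurePosixPath(source_path)
    if path.stem in ("index", "_index"):
        # Assumed convention: an index file represents its own directory.
        parts = path.parent.parts
    else:
        # Every other file becomes a directory named after the file.
        parts = path.parent.parts + (path.stem,)
    return "/" + "/".join(parts) + ("/" if parts else "")


for source in ("blog/jamstack-reconsidered.md", "about.md", "blog/_index.md"):
    print(source, "->", clean_url(source))
```

Even this toy version has to make a decision about index files, which hints at how quickly such rules accumulate.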

Strangely, this is an aspect of SSGs that is rarely talked about explicitly, although it is clearly fundamental to what they do. In fact, it is a major argument for using an SSG, even for a small, personal site: managing this information by hand is obviously not the right way to do it!

Unfortunately, SSGs don’t usually seem to make the information in the meta-data available externally. It would be useful to have it available for use in other tools. For example, it would be nice to be able to easily check all occurring tags, to make sure no spurious tags have been introduced via typos. Or to get a list of blog posts, by date, for use as an attachment to a CV. Any SSG collects all this information, but to my knowledge, it is not commonly made accessible to outside processing.
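As an illustration of the kind of external check I have in mind, the following sketch collects all tags and flags likely typos. It assumes front matter delimited by `---` lines and tags written as an inline list (`tags: [a, b]`); the helper names `read_tags` and `suspicious_tags` are hypothetical, and the posts are inlined as strings rather than read from disk to keep the example self-contained.

```python
import difflib
from collections import Counter


def read_tags(text: str) -> list:
    """Extract tags from front matter of the assumed form `tags: [a, b]`."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return []
    for line in lines[1:]:
        if line.strip() == "---":
            break  # end of front matter
        key, _, value = line.partition(":")
        if key.strip() == "tags":
            items = value.strip().strip("[]").split(",")
            return [t.strip().strip("\"'") for t in items if t.strip()]
    return []


def suspicious_tags(counts: Counter, cutoff: float = 0.8) -> list:
    """Pair each tag with a more frequently used tag of similar spelling."""
    pairs = []
    for tag, n in counts.items():
        more_common = [t for t in counts if t != tag and counts[t] > n]
        match = difflib.get_close_matches(tag, more_common, n=1, cutoff=cutoff)
        if match:
            pairs.append((tag, match[0]))
    return pairs


posts = {
    "a.md": "---\ntitle: A\ntags: [hugo, jamstack]\n---\nBody",
    "b.md": "---\ntitle: B\ntags: [hugo, jamstck]\n---\nBody",
    "c.md": "---\ntitle: C\ntags: [jamstack]\n---\nBody",
}
counts = Counter(tag for text in posts.values() for tag in read_tags(text))
print(sorted(counts.items()))
print(suspicious_tags(counts))
```

The point is less the script itself than the fact that it has to re-parse information the SSG has already collected.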

The Mapping Problem — Push vs Pull

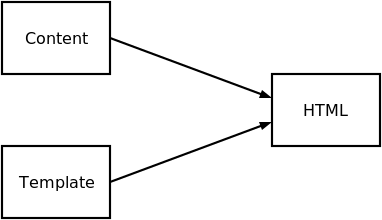

An SSG takes some content, applies a template, and produces HTML output. This seemingly innocuous process involves not one, but two mapping problems: each generated page in the final static site must know where its content comes from, and what template (or templates) to apply.

The question is how to accomplish this task most conveniently — convenient, that is, for the user. Maintaining a big, bulky configuration file with all the mappings is clearly not convenient, and neither is having to include processing instructions in each piece of content. In fact, the latter is worse, because now the configuration information is scattered about.

Hugo (and, I believe, most other commonly used SSGs) follows a push model. In this processing model, raw content is placed into an appropriate position in the source tree. The SSG picks it up from there and generates output in the corresponding position in the public website hierarchy. The source tree must therefore be organized like the desired output hierarchy. Hugo finds an applicable template by also searching a template directory, selecting the template that is located at the “closest” position to the piece of content under consideration. For example, to style a piece of content in the blog/ directory of the source tree, it will look for a template in the blog/ directory of the template tree.
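The “closest template” idea can be sketched as a walk up the directory tree. This is a deliberately simplified illustration, not Hugo's actual lookup order (which involves more rules); the function `find_template` and the file name `single.html` as the default are assumptions for the example.

```python
from pathlib import PurePosixPath
from typing import Optional


def find_template(content_path: str, templates: set,
                  name: str = "single.html") -> Optional[str]:
    """Return the template located closest to the content's directory."""
    directory = PurePosixPath(content_path).parent
    # Start in the content's own directory, then walk up towards the root.
    for candidate_dir in [directory, *directory.parents]:
        candidate = str(candidate_dir / name)
        if candidate in templates:
            return candidate
    return None


# The template tree mirrors the source tree: one site-wide default
# and one override for everything below blog/.
templates = {"single.html", "blog/single.html"}
print(find_template("blog/2023/post.md", templates))
print(find_template("about.md", templates))
```

Nothing is configured explicitly here: moving a content file to a different directory silently changes which template applies, which is both the convenience and the opacity of the scheme.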

The advantage of this scheme is that it does not require explicit configuration at all: all the mappings are inferred from the positions of various artifacts in their respective hierarchies. On the other hand, the matching and mapping rules can be opaque, in particular when combined with partial templates and page components (menus, navbars, footers, etc.). This scheme is also brittle with regard to non-content resources (images and stylesheets). It can be made to work, but it is all quite obscure and difficult to reason about.

An additional structural problem with the push model is the need for output pages that do not have a corresponding input; such pages are often created for meta-data. A page with links to all the posts matching a certain tag, for instance, does not show up in the raw content! The SSG generates it automatically, as part of its meta-data handling. But the spontaneous appearance of such pages is very confusing, because it is entirely driven by internal and invisible rules. Customizing such pages requires additional workarounds, because there is no obvious place to put the customization information (there is no corresponding raw content!).
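A small sketch shows where such pages come from. It assumes each post carries a title and a list of tags, and that tag pages live under a `/tags/<tag>/` URL; both the function `tag_pages` and that URL layout are assumptions for illustration.

```python
from collections import defaultdict


def tag_pages(posts: dict) -> dict:
    """Derive one listing page per tag; no source file exists for these pages."""
    by_tag = defaultdict(list)
    for url, meta in posts.items():
        for tag in meta["tags"]:
            by_tag[tag].append((meta["title"], url))

    pages = {}
    for tag, entries in sorted(by_tag.items()):
        links = "\n".join(
            f'<li><a href="{url}">{title}</a></li>'
            for title, url in sorted(entries)
        )
        pages[f"/tags/{tag}/"] = f"<h1>{tag}</h1>\n<ul>\n{links}\n</ul>"
    return pages


posts = {
    "/blog/a/": {"title": "A", "tags": ["hugo", "ssg"]},
    "/blog/b/": {"title": "B", "tags": ["hugo"]},
}
pages = tag_pages(posts)
print(sorted(pages))
```

The output contains two pages that appear nowhere in the input, and the markup for them is buried inside the generator, which is exactly why customizing them is awkward.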

An Alternative: Pull Processing

I believe that the underlying problem here is the choice of the push processing model itself. In the push model, the content drives the generation of output. I’d argue that it would be more natural to put the output in charge, and let each piece of output determine what content to include and which template to use. In other words, I am talking about a pull model of processing.

In a pull model, we start with the desired output, creating a placeholder stub for each page in the public site. This placeholder is empty, except that it specifies the content to display and the template to style it. The SSG then traverses the hierarchy of stubs, processes each, and replaces it with the generated HTML output.

Because the pull model does not rely on the location of the content in the source directory hierarchy, it is more flexible with regard to where the content is coming from: it might as well come from a database, or be generated by a program on demand. Moreover, the pull model allows pulling multiple pieces of content into a single output page, something that is difficult to do with the push model for a fixed number of page components, and impossible to do for arbitrary cardinalities.
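To make the idea less abstract, here is a minimal sketch of pull processing. Everything in it is hypothetical: the stub format, the names `STUBS`, `CONTENT`, and `TEMPLATES`, and the use of in-memory dictionaries in place of files. It is meant to show the direction of control, not to propose a design.

```python
from string import Template

# Content sources, keyed by name. They could equally be files,
# database rows, or the output of a program.
CONTENT = {
    "post-1": "Why a static site generator?",
    "recent": "Post 1, Post 2, Post 3",
}

TEMPLATES = {
    "article": Template("<article>$body</article>"),
    "page": Template("<main>$body</main>"),
}

# One stub per output page. A stub names the content to pull
# and the template to apply; it contains nothing else.
STUBS = {
    "blog/post-1/index.html": {"template": "article", "content": ["post-1"]},
    "index.html": {"template": "page", "content": ["post-1", "recent"]},
}


def render_stub(stub: dict) -> str:
    """Pull the content a stub names and render it with the stub's template."""
    body = "\n".join(CONTENT[name] for name in stub["content"])
    return TEMPLATES[stub["template"]].substitute(body=body)


def build_site(stubs: dict) -> dict:
    """Replace every stub with its generated HTML."""
    return {path: render_stub(stub) for path, stub in stubs.items()}


site = build_site(STUBS)
print(site["index.html"])
```

Note that the second stub pulls two pieces of content into one page, and that the same piece of content appears on two pages; neither requires a special mechanism.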

The situation is somewhat comparable to the difference between an XML SAX parser (which implements a push model) and an XML pull parser. (Maintaining and processing a global mapping table could be compared to XML DOM processing.)
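For readers who have not used both styles, the following sketch extracts the same data twice using Python's standard library: once with a SAX handler (the parser calls us) and once with `XMLPullParser` (we ask the parser for events). The sample document and the `PostHandler` class are made up for the example.

```python
import xml.sax
import xml.etree.ElementTree as ET

DOC = "<posts><post>First</post><post>Second</post></posts>"


class PostHandler(xml.sax.ContentHandler):
    """Push style: the parser drives and calls back into this handler."""

    def __init__(self):
        super().__init__()
        self.posts = []
        self._buffer = None

    def startElement(self, name, attrs):
        if name == "post":
            self._buffer = []

    def characters(self, content):
        if self._buffer is not None:
            self._buffer.append(content)

    def endElement(self, name):
        if name == "post":
            self.posts.append("".join(self._buffer))
            self._buffer = None


handler = PostHandler()
xml.sax.parseString(DOC.encode("utf-8"), handler)
pushed = handler.posts

# Pull style: our code drives and asks the parser for the next event.
parser = ET.XMLPullParser(events=("end",))
parser.feed(DOC)
parser.close()
pulled = [elem.text for _, elem in parser.read_events() if elem.tag == "post"]

print(pushed, pulled)
```

The push version needs a state machine spread over three callbacks; the pull version keeps the control flow in one place, which is the property I would like an SSG to have.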

I might prepare a more detailed description and/or implementation of this concept in a future post. (Contact me if you are interested; I’d be interested to brainstorm this idea or collaborate on it.)

Supported Information Architecture

The discussion of the “pull model” already hints at an important question that is often overlooked: what kind of information architecture can an SSG support? In the push model, where the content drives the process flow, the information architecture is determined by the layout of the source tree — in other words, an information architecture that is not strictly hierarchical cannot be handled by the tool.

Hugo provides an example of this. Hugo assumes that all content is organized in a strictly tree-like hierarchy of directories and files, with directories mapping to “list” templates, and files mapping to “single page” templates. When considering a website as a representation of a file system, this does make sense; and many websites do, in fact, follow this architecture. Even a site like Amazon consists primarily of list and single-detail pages. But the comparison with Amazon also reveals just how limiting Hugo’s content model is: a single-detail page does not only contain information about one item, but also a list of other items (“Customers who bought also bought…”), as well as a list of reviews!

Hugo does not support such information architectures. It is not possible to display two items of content side-by-side on a single page, or to show several lists next to each other, or to show a combination of a list and an item. Hugo has no notion of independent blocks of content that are combined to form a page. Notice that this is not a limitation of the static-site-generation model. (Although they probably don’t, Amazon could pre-generate its single-detail pages, including recommendations and reviews, updating them once a day.) Instead, it stems from Hugo’s expectation that the final website must map, directly, to a hierarchy of file system objects. In fairness, various workarounds exist to add “widgets” to a page (such as a navbar, a footer, or a tag cloud), but each requires an ad-hoc mechanism for what is essentially a fixed element. There is no way to assemble an arbitrary collection of independent content elements onto a single page (to my knowledge).

Input Format

The most common input format for raw content seems to be Markdown. This is unfortunate, but there does not seem to be a better alternative.

The problem with Markdown is that it is not expressive enough to capture the richness of HTML output. It does not even provide a way to specify color, for crying out loud! And of course no built-in way to indicate a CSS class specifier, either.

There are workarounds, but they all amount to monkey patching (such as embedded HTML). In fact, I believe that the existence of so many dialects of Markdown (unfortunate as this is in itself) is actually a consequence of its lack of expressiveness: just too much functionality is missing, forcing too many users to make ad-hoc additions.

Markdown essentially promulgates a vision of the Web, status circa 1994: no color (everyone is still using black-and-white monitors), only links, <i>, <b>, and <u> formatting, and <h1> through <h6> headings.

Extensibility and Themes

Customization is at the heart of site generation: everybody wants their site to look different. This is usually achieved through “themes”, and works reasonably well. One problem is that each theme introduces a new opportunity for bugs, version skew, compatibility mismatch, and the like. I don’t know how to achieve this, but something like unit tests for themes would be highly desirable, so that users (and theme authors) can be confident that things work as promised and as expected.

Because customization and extensibility are so important to the matter at hand, an extensible application architecture or a plugin facility is desirable. Hugo, as a compiled application, is at a disadvantage here. The consequence is that an increasing amount of custom and user-space logic is moving into the templates, which, as probably everyone who has worked with a templating system will agree, is the wrong place for it.

Not As Easy As It Seems

Overall, I have begun to appreciate the complexity of the problem space. On the face of it, this is not obvious: read some input, apply some templates, write some HTML — how hard can that be? Turns out pretty hard, in particular if you want to cover not just the “easy 90 percent”, but a sufficient range of edge cases also, provide for the necessary customizations, and be reasonably convenient to use.

I think it is very easy to underestimate the complexity involved in developing a comprehensive, general SSG. Some of the shortcomings in existing tools seem pretty directly attributable to insufficient understanding of the problem space, followed by a series of ad-hoc fixes for each new difficulty, edge case, or non-anticipated use case. For example, I am certain that Hugo’s (apparent) difficulty can be explained by its convoluted development: every time an unforeseen, but required feature was discovered, the developers added yet another ad-hoc mechanism to support it (without ever stepping back to really rethink the processing model). That’s how we got to the confusing (and confused) state we have today.

For anyone who wants to rise to the challenge and add to the existing 350 (!!!) static site generators already listed on the JamStack website, I would like to offer three pieces of advice:

- Think, hard, about the processing model. Do not assume that it can all be put together with a little bit of simple glue code. In fact, I believe it might be worth taking a look at a book on compiler design. Some of the problems (parsing, internal representation, output generation) are very much the same.

- Invest in a proper data model for the meta-data. Don’t treat meta-data handling as an afterthought, and do not scatter meta-data handling throughout the code base.

- Think in terms of “tool set”, rather than one monster application. Things like uploading to a hosting provider or Netlify are best left to a separate, specialized tool. Sure, one can provide a nice, unifying wrapper around all that, but there should be a clear division of labor behind the scenes. (Again, compiler writers have shown the way.)
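As a rough illustration of the second point, a dedicated meta-data model might start out like the sketch below. The class names `PageMeta` and `SiteIndex`, their fields, and the JSON export are all assumptions of mine; the aim is only to show the meta-data living in one place, queryable by the generator and exportable to other tools.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date


@dataclass(frozen=True)
class PageMeta:
    """Everything the generator knows about a page, apart from its body."""
    url: str
    title: str
    published: date
    tags: tuple = ()


@dataclass
class SiteIndex:
    """Single home for all meta-data queries."""
    pages: list = field(default_factory=list)

    def add(self, page: PageMeta) -> None:
        self.pages.append(page)

    def by_tag(self, tag: str) -> list:
        return [p for p in self.pages if tag in p.tags]

    def by_date(self) -> list:
        """Return all pages, newest first."""
        return sorted(self.pages, key=lambda p: p.published, reverse=True)

    def to_json(self) -> str:
        """Export the index so that separate tools can consume it."""
        rows = [{**asdict(p), "published": p.published.isoformat()}
                for p in self.pages]
        return json.dumps(rows, indent=2)


index = SiteIndex()
index.add(PageMeta("/blog/a/", "A", date(2022, 5, 1), ("hugo",)))
index.add(PageMeta("/blog/b/", "B", date(2023, 1, 15), ("hugo", "ssg")))
print([p.title for p in index.by_date()])
print(index.to_json())
```

With an export like `to_json`, uploading, link checking, or producing a publication list can each be a separate small tool working from the same data, in keeping with the third point.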