Transferring Files in a Local Linux Network

Sharing files across computers on a local, “informal” (home) network is a recurring need. Yet, at least in the Linux world, it is one that does not have an obvious, canonical, default solution. Email, cloud storage, and USB sticks all seem shockingly common.

Here, then, is a list of direct network transfers: without email, without roundtripping through cloud storage, and without using a USB stick. I have tried most, but not all, of these methods on my own systems; in each case, I try to describe the essence of the method.

All of the examples require that the appropriate ports are open on the local firewall (typically ufw). This is easily forgotten, in particular for one-off file transfers using netcat or HTTP.
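Because the firewall step is so easy to forget, it may help to wrap it in a small reminder script. The following is a minimal sketch, assuming ufw is the firewall and the transfer uses TCP port 8088 (the port used in this article's examples). The `run` helper and the `DRY_RUN` variable are hypothetical names introduced here, not part of ufw; by default, the script only prints the commands instead of executing them.

```shell
#!/bin/sh
# Sketch: open a port in ufw for a one-off transfer, then close it again.
# DRY_RUN and run() are illustrative helpers, not part of ufw.
DRY_RUN="${DRY_RUN:-1}"      # default: only print the commands

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

open_port()  { run sudo ufw allow "$1/tcp"; }
close_port() { run sudo ufw delete allow "$1/tcp"; }

open_port 8088
# ... perform the transfer here ...
close_port 8088
```

Setting `DRY_RUN=0` in the environment makes the script actually change the firewall rules, which requires sudo rights.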

Conventions

In the following, it will make sense to identify one of the two hosts as the source src and one as the destination dst. The usual distinction between client and server (or local and remote) is less useful in the current scenario, because on a local network, one typically has equal access to both computers.

In the commands below, src and dst will have to be filled in with an IP address or a local hostname. Also, the filesystem paths will, of course, have to be adjusted.

netcat

The ultimate one-off. Great for single files, and to gain low-level Unix street cred.

# on dst:

nc -l 8088 > outfile

# on src:

nc -N dst 8088 < infile # replace "dst" with hostname or address

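The listener (-l flag) must be set up before trying to send. The -N option (available in the OpenBSD variant of netcat) instructs the sender to shut down the connection after reaching EOF on its input.

Notice that the port is not concatenated to the host name or address using a colon, but is given as a separate argument. (On the listening side, some netcat variants also accept, or even require, the -p flag to indicate the port; on the sending side, -p sets the local source port rather than the destination port.)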
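Netcat only moves a byte stream, so a whole directory can be sent by packing it with tar on one side and unpacking it on the other. Here is a sketch, assuming the same nc options as in the example above. The names `pack` and `unpack` are hypothetical helpers introduced for illustration, and the last lines replace the network connection with a plain pipe so that the round trip can be tried on a single machine.

```shell
#!/bin/sh
# pack DIR: write a tar archive of DIR's contents to stdout.
pack()   { tar -cf - -C "$1" . ; }
# unpack DIR: extract a tar archive from stdin into DIR (created if missing).
unpack() { mkdir -p "$1" && tar -xf - -C "$1" ; }

# Across the network (listener first, as before; the functions
# must be defined on both hosts):
#   on dst:  nc -l 8088 | unpack /path/to/destination
#   on src:  pack /path/to/directory | nc -N dst 8088

# Local trial run: a pipe stands in for the netcat connection.
demo=$(mktemp -d)
mkdir -p "$demo/src/sub"
echo "hello" > "$demo/src/sub/file.txt"
pack "$demo/src" | unpack "$demo/dst"
cat "$demo/dst/sub/file.txt"
```

Like plain netcat, this performs no integrity check and no encryption, so it remains a tool for one-offs on a trusted network.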

HTTP

A regular HTTP web server.

# on src:

cd /path/to/directory

busybox httpd -f -p 8088

# on dst:

wget src:8088/filename # replace "src" and "filename" as appropriate

I have found the busybox HTTP server to be smoother and faster than the Python built-in (which is available through: python3 -m http.server 8088).

The -f flag of busybox httpd keeps the process in the foreground; stopping the process stops the service.

Probably my favorite solution for unidirectional file transfer: simple and familiar.

scp

If the ssh daemon is running on at least one of the boxes, it can be used to transfer files.

# if sshd is running on dst

# on src:

scp /path/to/file username@dst:/path/to/destination/

# if sshd is running on src

# on dst:

scp username@src:/path/to/file .

This solution will typically require passwords or authentication keys to be set up. It is handy if sshd is already running on one of the local machines.
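Setting up key-based authentication is a one-time step. Below is a hedged sketch using the standard OpenSSH tools. The key is written to a temporary directory with an empty passphrase purely so that the example runs without prompting; for real use, one would accept the default location under ~/.ssh and choose a passphrase. As before, username and dst are placeholders.

```shell
#!/bin/sh
# Generate a demo key pair in a scratch directory.
keydir=$(mktemp -d)
# -t ed25519: key type; -N '': empty passphrase (demo only);
# -f: output file; -q: suppress the progress output
ssh-keygen -q -t ed25519 -N '' -f "$keydir/id_ed25519"
ls "$keydir"

# Install the public key on the host that runs sshd
# (this asks for the account password one last time):
#   ssh-copy-id -i "$keydir/id_ed25519.pub" username@dst
# Afterwards, scp can authenticate with the key:
#   scp -i "$keydir/id_ed25519" /path/to/file username@dst:/path/to/destination/
```

With a key in the default location, the -i option can be dropped, and the scp and rsync commands in this article work unchanged.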

rsync over ssh

The rsync utility typically utilizes ssh for remote connections and can be used whenever the ssh daemon is running on at least one of the hosts. Syntax and authentication requirements are essentially the same as before.

# if sshd is running on dst

# on src:

rsync /path/to/file username@dst:/path/to/destination/

# if sshd is running on src

# on dst:

rsync username@src:/path/to/file .

Useful to transfer entire directories (with the -r or -a option), and to update a remote directory incrementally (rather than transferring all of it from scratch). And there is much more: rsync is a big topic all by itself.

Because rsync can transfer not only files but entire directories, it is sensitive to the presence or absence of a trailing slash on the source path: with the slash, the contents of the directory are copied; without it, the directory itself is copied.
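The trailing-slash behavior is easiest to see by trying it locally, without any network involved. The following sketch assumes rsync is installed; the directory and file names are made up for the demonstration.

```shell
#!/bin/sh
# Local demonstration of rsync's trailing-slash rule (no network needed).
work=$(mktemp -d)
mkdir -p "$work/photos" "$work/copy1" "$work/copy2"
echo "data" > "$work/photos/a.jpg"

# No trailing slash on the source: the directory itself is copied,
# giving copy1/photos/a.jpg
rsync -a "$work/photos" "$work/copy1/"

# Trailing slash on the source: only the contents are copied,
# giving copy2/a.jpg
rsync -a "$work/photos/" "$work/copy2/"

# Show the resulting layout.
(cd "$work" && find copy1 copy2 -type f | sort)
```

The same rule applies when either side is a remote location such as username@dst:/path/to/destination/.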

rsync as daemon

It is also possible to run rsync as a daemon (server). This requires some explicit configuration, and a different location syntax (double colons, instead of single colons). The rsync daemon offers no encryption, which may be acceptable on a local network, but security is nevertheless a concern: it is not a service one may want to keep running perpetually without additional hardening.
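To give an idea of what the "explicit configuration" amounts to, here is a minimal sketch of a read-only, one-off share. I have not hardened it in any way. The module name share, the unprivileged port 8730, and all paths are placeholders chosen for illustration, and no authentication is configured. The script only writes the configuration file; the commands for starting the daemon and fetching a file are shown as comments.

```shell
#!/bin/sh
# Write a minimal rsyncd.conf for a one-off, read-only share.
# Module name "share", the port, and the path are placeholders.
conf=$(mktemp)
cat > "$conf" <<'EOF'
# global parameters
port = 8730
use chroot = no

[share]
    path = /path/to/directory
    read only = yes
    comment = one-off local share
EOF
cat "$conf"

# on src (stays in the foreground; stop it with Ctrl-C when done):
#   rsync --daemon --no-detach --config="$conf"
# on dst (note the double colon before the module name):
#   rsync -av --port=8730 src::share/filename .
```

Running the daemon in the foreground on a non-default port mirrors the one-off HTTP server above: stopping the process stops the service.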

rclone (not really)

The rclone program is primarily a frontend (client) for a wide selection of cloud storage backends, rather than a file server. It can be used as a client for one of the protocols that may run on a local network; in particular, it supports both HTTP and SFTP. (Its rclone serve subcommand can also expose a directory over protocols such as HTTP, WebDAV, or SFTP, so it is not strictly client-only.)

Warpinator

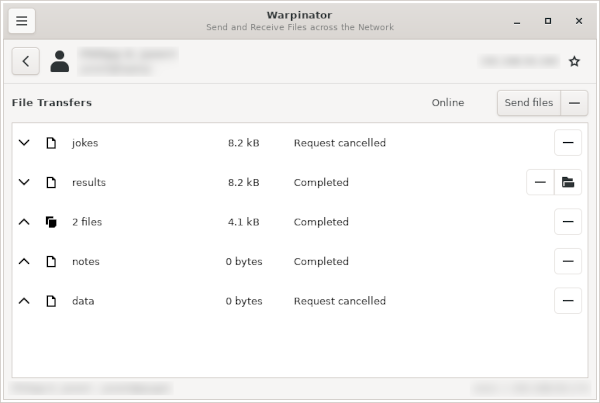

Originating on Linux Mint, warpinator uses the avahi (zeroconf) service to discover compatible services on the local network. It is a GUI-driven program to exchange files primarily with a participating user (not a host). The intended usage scenario has two users, who need to exchange some files, essentially sitting next to each other. Warpinator is optimized for that kind of immediate data exchange, between users who can directly communicate with each other.

In the GUI, the sending user selects a file or directory to send. On the receiver’s side, the user sees a message, similar to a chat message, that a file is “waiting for approval”. Once explicitly allowed by the receiver, the file or directory is downloaded to a (configurable) target directory. (It is also possible to configure automatic acceptance of incoming transfers.)

All participating computers must run warpinator (of course), and allow traffic on the required ports in their firewalls. On a Mint desktop environment, warpinator integrates directly with the gufw firewall GUI; under other window managers, it may be necessary to invoke gufw separately.

Authentication in warpinator is handled via a “Group Code”: essentially, a shared password that is expected to have been communicated offline beforehand.

The need for explicit configuration is minimal. Once running, the program keeps track of available connections, but I have found it on occasion necessary to reset the program to make it recognize some recent change in the network.

Unofficial implementations exist for Windows, Android, and iOS.

Syncthing

Syncthing is quite similar to warpinator, except that it takes the user out of the loop. Syncthing recognizes one or more directories, which it then keeps synchronized across all configured hosts: changes to the directory’s contents on one host are immediately propagated to all other hosts.

Syncthing can announce itself on the local network, but it does not pair hosts automatically: every host must be told explicitly about every other host. Authentication is accomplished via a (lengthy) device ID, derived from a cryptographic hash, which is also available as a QR code; sharing it with a device that does not have a camera can be challenging.

Overall, the configuration options and overhead are somewhat greater than for warpinator. Syncthing includes a Web UI, as well as a standalone GUI tool.

The typical installation package does not set up syncthing to be automatically started by systemd, but it does provide all the required configuration files. It also includes scripts to configure a local firewall as required.

Whenever there is shared access to a common resource, there is the issue of conflicting writes. Syncthing solves this problem not by locking, but by creating two copies of the resource, distinguished by timestamps added to their names. Merging the copies back into a single master is left to the users. This may be less of a problem in practice, but it is something to be aware of.
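Since merging is left to the users, it is useful to be able to find the conflict copies in the first place. Here is a small sketch, assuming Syncthing's usual naming scheme, in which a conflict copy carries .sync-conflict- followed by a date and time in its name. The function name `list_conflicts` and the example file names are made up for illustration.

```shell
#!/bin/sh
# list_conflicts DIR: print the conflict copies found anywhere under DIR.
list_conflicts() {
    find "$1" -type f -name '*.sync-conflict-*' | sort
}

# Demo on a scratch directory with one made-up conflict copy.
sync_dir=$(mktemp -d)
touch "$sync_dir/notes.txt"
touch "$sync_dir/notes.sync-conflict-20240101-120000-ABCDEFG.txt"
list_conflicts "$sync_dir"
```

On a real system, the argument would be the path of the synchronized folder.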

Overall, syncthing feels more “robust” than warpinator. Whether this is just an ironic side-effect of the more painful configuration process is hard to determine.

nfs and smb

Finally, I should mention nfs and smb, two network filesystems, which make it possible to share not only files, but entire directory trees, permissions, and attributes over a network. Configuring and administering either is not trivial, and most likely overkill for an informal home network.

Another system, sshfs, allows mounting a remote directory over ssh, but this project is, at this point, no longer maintained.

Conclusion

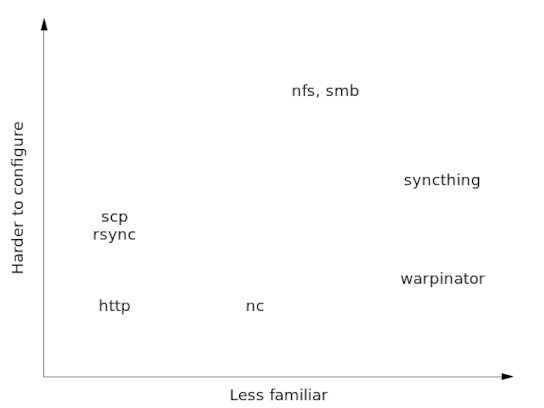

So, what gives? I am not sure — none of these methods seems to win hands-down, and none is sufficiently widely and exclusively accepted that it would win by default.

Netcat and HTTP are simple and straightforward. Suitable for ad-hoc one-offs, but hardly ideal for more permanent set-ups.

Maybe the best general-purpose compromise between familiarity and robustness comes from solutions utilizing ssh. One can argue that, even in a local network, it makes sense for all hosts to run Secure Shell as a service. Once authentication keys have been set up, file transfers, using either scp or rsync, are then transparent yet reliable.

By contrast, warpinator and syncthing are more specialized solutions and designed for rather specific usage patterns.

Warpinator feels both slick and hokey at the same time. The chat-like user experience seems like an odd processing model for filesystem operations. I also don’t know how secure warpinator is, and its relative obscurity does not inspire particular confidence in this area. In fairness, it is only intended for a specific usage pattern: two people, sitting next to each other, who want to exchange a couple of files. They both start Warpinator, agree on a pass phrase, perform the data exchange, and then shut the program down again. For that specific situation, Warpinator is indeed a convenient solution. Just keep in mind that it is not really intended to be kept running in the background perpetually.

Syncthing is intended for almost exactly the opposite scenario: a group of hosts that need permanent access to a shared set of resources. And for that, Syncthing does seem neat: it essentially replicates the functionality of a network file system, but with much less configuration overhead. The problem is that it implements a different paradigm: shared access to a common resource, rather than the transfer of a copy from one owner to another. Although it can be used for file transfers, its real application areas lie elsewhere. Also, it is sufficiently complex that it strikes me as overkill for local file transfers; I would seriously consider it, though, for setting up a shared team or project workspace.